The Crowdmark grading workflow generates a deluge of data owned by our university customers. Each question an instructor poses to students in a course, each student’s response, and the associated scores, annotations and text comments left by the grading team are captured and archived for detailed analysis. Our team is developing secure data sharing pathways, analysis and visualization tools, and academic integrity protection processes, all designed to empower the universities we serve to extract insights from their data on Crowdmark. I’d like to highlight some recent advances and forecast the improvements and insights you can expect from Crowdmark over the next few months.

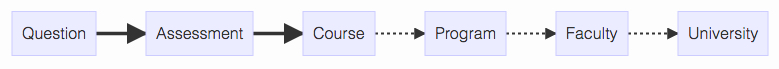

Crowdmark’s analytics strategy aims to generate insights into learning performance (what, when and how students learned) and instructional team performance (timing, accuracy, quality of scores and feedback) by analyzing the data generated by teams using our platform. Our approach considers the data along a nested sequence of contexts: a single question, a particular assessment, a single course, a complete program and ultimately a whole university or system.

Crowdmark has deployed robust data analysis features at the question and assessment layers. The Crowdmark team is working to enrich these data streams and generate new insights from data at the course layer.

Question Level

The grading workflow on Crowdmark naturally captures data at the question level. Crowdmark displays the histogram of scores on each question and calculates and shares basic descriptive statistics. Crowdmark also measures the grading speed for each question.
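The per-question summary described above can be sketched in a few lines of Python. This is only an illustration, not Crowdmark's implementation: the function names `describe_scores` and `score_histogram` and the sample scores are hypothetical, and the particular statistics shown are an assumption about what "basic descriptive statistics" might include.

```python
from collections import Counter
from statistics import mean, median, pstdev


def describe_scores(scores):
    """Return basic descriptive statistics for one question's scores."""
    return {
        "n": len(scores),
        "mean": mean(scores),
        "median": median(scores),
        "stdev": pstdev(scores),  # population standard deviation
        "min": min(scores),
        "max": max(scores),
    }


def score_histogram(scores):
    """Count how many students received each score, ordered by score."""
    return dict(sorted(Counter(scores).items()))


# Hypothetical scores out of 5 on a single question.
scores = [5, 3, 4, 5, 2, 4, 4]
stats = describe_scores(scores)
hist = score_histogram(scores)
```

Here `hist` maps each score to the number of students who received it, which is the data a histogram display would plot.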

Assessment Level

Crowdmark automatically sums total scores and generates a histogram with descriptive statistics for each assessment. It calculates the total time each member of the instructional team spends grading, and it measures the return interval, that is, how long it takes to evaluate and return an assessment. Exams that are returned quickly to students are known to generate improved learning outcomes compared to exams that are returned after a longer time. The Crowdmark team is developing tools at the assessment level that will give new insights into grading team performance. Other visualizations of the scores data are also in development.
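As a rough sketch of the two timing measures mentioned above, the following Python computes total grading time per grader and a return interval. The event format, the grader names, and the choice to measure the interval from collection time to return time are all assumptions made for illustration; the post does not say how Crowdmark records or defines these internally.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def grading_time_by_grader(events):
    """Sum grading durations per grader from (grader, duration) pairs."""
    totals = defaultdict(timedelta)
    for grader, duration in events:
        totals[grader] += duration
    return dict(totals)


def return_interval(collected_at, returned_at):
    """Time elapsed between collecting an assessment and returning it."""
    return returned_at - collected_at


# Hypothetical grading sessions for one assessment.
events = [
    ("ana", timedelta(minutes=42)),
    ("ben", timedelta(minutes=30)),
    ("ana", timedelta(minutes=18)),
]
totals = grading_time_by_grader(events)

# Hypothetical collection and return timestamps.
interval = return_interval(
    datetime(2024, 3, 1, 17, 0),
    datetime(2024, 3, 4, 9, 30),
)
```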

Course Level

The arc of a course involves a sequence of assessments. Crowdmark is developing a granular multidimensional suite of assessment analytics that will allow instructors and students to monitor learning achievement over time. The timing data collected by Crowdmark can be used to accurately quantify total time (and labor cost) spent on grading in a course. Our team is exploring correlations of scores on each question with the final score in courses. We aim to help instructors identify questions on assignments and exams given early in the term that may serve as diagnostic indicators of expected final score in a course. These “canary in a coal mine” questions will allow instructors to identify students at risk of failing and intervene to help these students succeed. Crowdmark is investigating text analysis methods that can be applied to the comments left by the grading team to generate new insights into student learning and grading team effectiveness on a question-by-question basis across an entire course.
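One simple way to look for such diagnostic questions is to rank early questions by how strongly their scores correlate with final course scores. The sketch below uses the Pearson correlation coefficient as an assumed measure; the post does not specify which statistic Crowdmark uses, and the function names, question labels, and data are hypothetical.

```python
from math import sqrt
from statistics import mean


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length, at least 2 items")
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant column carries no linear signal
    return cov / sqrt(var_x * var_y)


def rank_questions(question_scores, final_scores):
    """Rank questions by correlation with final scores, strongest first."""
    correlations = {
        question: pearson(scores, final_scores)
        for question, scores in question_scores.items()
    }
    return sorted(correlations.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical data: five students, two early-term questions.
final_scores = [55, 62, 71, 80, 93]
question_scores = {
    "Q1": [1, 2, 3, 4, 5],
    "Q2": [4, 4, 5, 4, 5],
}
ranked = rank_questions(question_scores, final_scores)
```

In this toy data, `Q1` ranks first because its scores track the final scores more closely than those of `Q2`. A correlation on a small sample is only a starting point, so any real use would need more students and a check that the relationship holds across terms.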

Eventually, Crowdmark will build higher level analysis tools enabling comparisons between different cohorts within a program or faculty. With input from engineering schools, we are building an insights dashboard that continuously measures program and cohort performance in achieving learning objectives and meeting accreditation criteria, sidestepping the cumbersome task of assembling documents for ABET and CEAB accreditation reviews.

Inspired by feedback from professors, graders, students, and administrators using our platform, the team at Crowdmark continues to deliver improvements that transform traditional assessment into an enriched dialogue between students and instructional teams.

| Level | Learning | Instruction |
|---|---|---|
| Question | Score | Grading Timing |
| Assessment | Histogram | Return Interval |
| Course | Heat Map | Feedback Analysis |
| Sequence | Correlations | Intervention |
| Program | Cohort Comparisons | Curriculum Innovation |
| Faculty | Learning Outcomes | Accreditation and Certification |